Cote.js

29 Sep 2014

Last weekend (2014-09-27), I attended the JSIst event, and it impressed me a lot. There were lots of interesting talks, and I made lots of new friends. You can find the presentations on the event website. I was especially interested in one presentation, “Scaling Node.js Applications with Redis, RabbitMQ and cote.js”, presented by Armagan Amcalar.

I am new to Node.js, but I have tried using sockets and cache systems in my projects, and I find these subjects very entertaining. I used the zmq library, which is the ØMQ binding for Node.js, to try to handle a MySQL “too many connections” error: I created a tunnel in which lots of requesters connect to a single responder. But I don’t think ZeroMQ is very easy to use. I spent a lot of time installing it on an Ubuntu server, which is usually the easiest kind of server to install things on, since apt-get solves a lot of problems. I finally got it installed on my server, but my development machine (my local computer) runs Mac OS X, and I could not install it there. I had to install Vagrant to create a development environment that matches my Ubuntu server. And all of that was only to get the project started. I didn’t want to take it any further. It was exhausting.
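The idea behind that tunnel can be sketched without any messaging library. The snippet below is only an illustration under my own assumptions, not the zmq setup itself: `createFunnel` and `fakeQuery` are hypothetical names, and the "query" is a timer instead of a real MySQL call. Many callers hand their work to one funnel, and the funnel never runs more than a fixed number of jobs at once, which is what keeps the number of open connections bounded.

```javascript
// Hypothetical sketch: funnel many callers through a fixed number of "connections".
// createFunnel and fakeQuery are illustrative names, not part of zmq, mysql, or cote.
function createFunnel(maxConnections) {
  var active = 0;   // jobs currently holding a connection
  var peak = 0;     // highest number of simultaneous jobs seen so far
  var waiting = []; // jobs queued until a connection frees up

  function next() {
    while (active < maxConnections && waiting.length > 0) {
      var job = waiting.shift();
      active++;
      peak = Math.max(peak, active);
      startJob(job);
    }
  }

  function startJob(job) {
    // The task calls done(result) when its query has finished.
    job.task(function done(result) {
      active--;
      job.callback(result);
      next();
    });
  }

  return {
    request: function (task, callback) {
      waiting.push({ task: task, callback: callback });
      next();
    },
    stats: function () {
      return { active: active, peak: peak, waiting: waiting.length };
    }
  };
}

// Pretend query: takes 20 ms, then reports which job finished.
function fakeQuery(id) {
  return function (done) {
    setTimeout(function () { done('row for job ' + id); }, 20);
  };
}

// Six callers share a funnel that allows only two jobs at a time.
var funnel = createFunnel(2);
for (var id = 1; id <= 6; id++) {
  funnel.request(fakeQuery(id), function (result) {
    console.log(result, funnel.stats());
  });
}
```

In a real setup the funnel would live in the responder process, and the requesters would reach it over the network instead of calling `request` directly.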

In his JSIst presentation, Armagan Amcalar talked about how we can scale Node.js applications with cote.js, RabbitMQ and Redis. All three are good tools for scaling and for lots of other things. I decided to try cote.js in my project because it is zeroconf. In the following days, I will try to build a solution for my old MySQL “too many connections” error. There was an example in the cote.js repository that was almost the same as my case. To see how it works, I created a basic example, which is below. First, the installation:

    $ npm install cote

That’s all.

Then I started using it. Here is my little sample:

There are several mechanisms for communication; I chose the request/response mechanism for my example. Creating a responder is simple. You just set a name, and you can set a respondsTo parameter either to split your answers across many responders or to merge your responses into one responder. I used one responder for many requesters, to handle all requests from one source.

    // responder.js
    var Responder = require('cote').Responder;

    // Shared counter: every request, from any requester, gets the next value.
    var responder_counter = 0;

    var randomResponder = new Responder({
        name: 'counterRep',
        // You can use this parameter to separate your responses.
        respondsTo: ['counterRequest']
    });

    randomResponder.on('counterRequest', function(req, cb) {
        var answer = responder_counter++;

        // You could fetch data from a database here and send it to the requester.
        console.log('request', req.val, 'answering with', answer);

        cb(answer);
    });

Below is one of my requesters. In a requester, I get data from the responder, process it, send it somewhere, and so on. My other requester is the same in this example; I changed only the interval value, which is 100 ms in requester2.js.

    // requester1.js
    var Requester = require('cote').Requester;

    var Request = new Requester({
        name: 'counterReq',
        // Use the same request type that the responder lists in respondsTo.
        requests: ['counterRequest']
    });

    Request.on('ready', function() {
        var counter = 0;

        // Send a new request every 200 ms.
        setInterval(function() {
            var req = {
                type: 'counterRequest',
                val: counter++
            };

            Request.send(req, function(res) {
                // Process the data and do something with it.
                console.log('request', req, 'answer', res);
            });
        }, 200);
    });

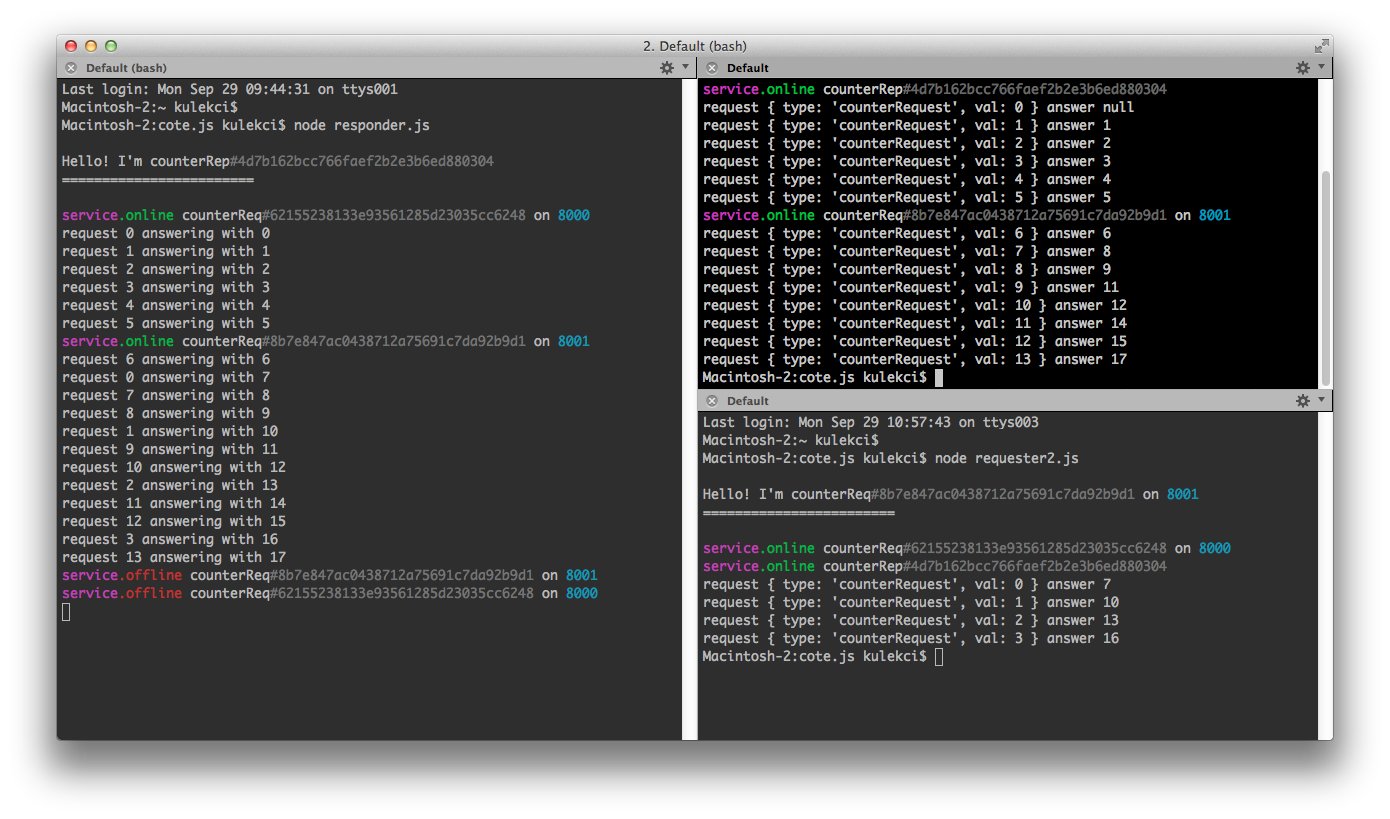

That’s it. I did not have to configure much. I did not bother with installation or with configuring IPs, ports, and so on. I just used it. That’s cool. It also prints cute, colorful logs in the terminal, as the screenshot above shows.
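To make the message flow easier to follow, here is a minimal in-process sketch of the same request/response pattern. It is not how cote works internally: it assumes Node's built-in EventEmitter as a stand-in for the network, and `bus`, `makeRequester` and `record` are names I made up for the illustration. It only shows the shape of the exchange: a typed request goes out, the single responder answers through a callback.

```javascript
var EventEmitter = require('events').EventEmitter;

// Hypothetical in-process stand-in for the network; not part of cote.
var bus = new EventEmitter();

// "Responder": owns the counter and answers every 'counterRequest' message.
var responderCounter = 0;
bus.on('counterRequest', function (req, cb) {
  cb(responderCounter++);
});

// "Requester" factory: each requester keeps its own request counter,
// but all of them send to the same bus.
function makeRequester(name) {
  var counter = 0;
  return function send(onAnswer) {
    var req = { type: 'counterRequest', from: name, val: counter++ };
    bus.emit(req.type, req, function (res) {
      onAnswer(req, res);
    });
  };
}

var answers = [];
function record(req, res) {
  answers.push(req.from + ':' + req.val + '->' + res);
}

var requester1 = makeRequester('requester1');
var requester2 = makeRequester('requester2');

requester1(record);
requester2(record);
requester1(record);

console.log(answers);
```

Each requester numbers its own requests, while the answers form one shared sequence because a single responder hands them out. That is the "all requests from one source" behaviour I wanted.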

I added some comments to my basic example. In the following days, I will add more code to it and try to develop a more complex structure.

You can find cote.js at https://github.com/dashersw/cote.

Share your opinion with us:

If you want to say something or share your opinion about this blog post, please create an issue on GitHub. You can also contribute to this article with a pull request. You can be sure I'll look at it.